All product names, logos, and brands used in this post are property of their respective owners.

As of 2021, I am pleased to announce that Microsoft has released a native Amazon S3 Connector for Power Automate! This is a very exciting and long-awaited development (in my opinion). I have not been this excited about a Flow connector since the original SFTP Connector!

At the time of writing, the native S3 connector is “read-only” - this means Power Automate can only use it to retrieve data FROM Amazon S3 storage buckets. It cannot “write” data TO S3 (yet). In this post, I will describe the basic configuration required to allow Power Automate (Flow) to access AWS S3 buckets.

Note: If you need write access to AWS S3 from a Power Automate Flow, look at my post covering Couchdrop.

Amazon S3 (and IAM) Setup

The AWS setup is relatively simple. I recommend creating one IAM user (for API access) per S3 bucket to keep things compartmentalized and secure.

Assuming you already have a bucket set up, let’s look at setting up the IAM user. This user’s credentials will ultimately be added to the Amazon S3 connector in Power Automate.

Browse to the IAM console in AWS

Select Users -> Add Users

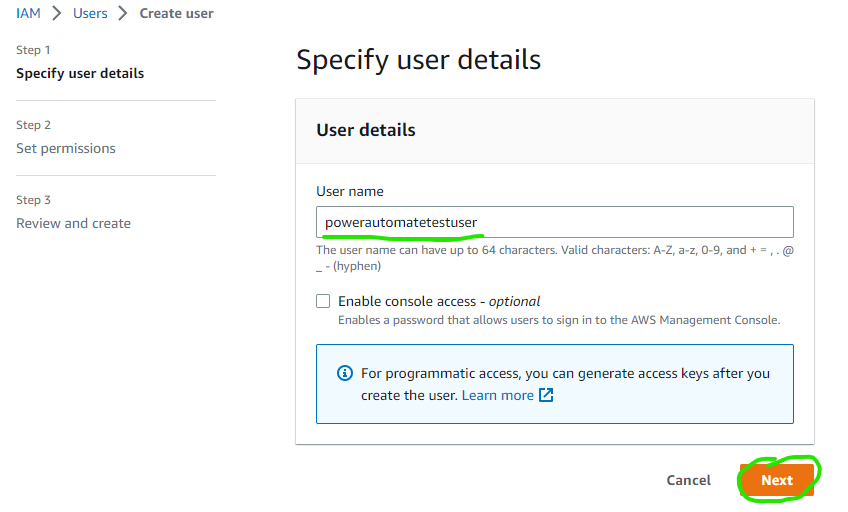

Enter a username of your choosing, leave the default options, then click Next

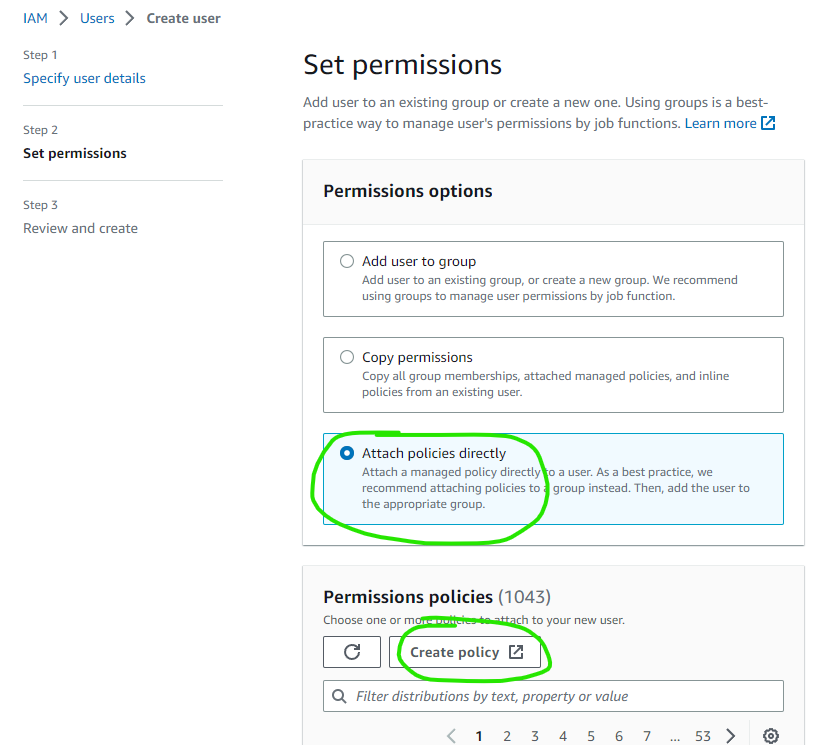

Select the Attach existing policies directly option, then click the Create Policy button

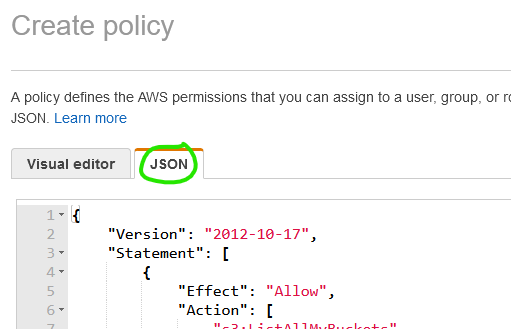

In the new window, select the JSON tab

I used a slightly modified version of one of Amazon’s examples. Since the Power Automate AWS S3 Connector is “read-only,” I wanted to ensure the AWS IAM user had the same read-only access to the bucket (for security reasons). For reference, the name of the bucket this policy grants read access to is neilsawesomepowerautomatebucket:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:ListAllMyBuckets"
                ],
                "Resource": "arn:aws:s3:::*"
            },
            {
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:GetBucketLocation"
                ],
                "Resource": "arn:aws:s3:::neilsawesomepowerautomatebucket"
            },
            {
                "Effect": "Allow",
                "Action": [
                    "s3:GetObject",
                    "s3:GetObjectAcl"
                ],
                "Resource": "arn:aws:s3:::neilsawesomepowerautomatebucket/*"
            }
        ]
    }
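If you follow the one-user-per-bucket approach, you will end up writing this policy more than once. Here is a minimal Python sketch that generates the same three statements for any bucket name. The function name `read_only_policy` is my own invention for illustration, not part of any AWS tooling, and the script only prints JSON for you to paste into the JSON tab.

```python
import json


def read_only_policy(bucket: str) -> dict:
    """Build the read-only policy from this post for a single bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Account-level listing, used to enumerate buckets.
                "Effect": "Allow",
                "Action": ["s3:ListAllMyBuckets"],
                "Resource": "arn:aws:s3:::*",
            },
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": bucket_arn,
            },
            {
                # Object-level actions apply to keys inside the bucket, hence "/*".
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:GetObjectAcl"],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(read_only_policy("neilsawesomepowerautomatebucket"), indent=4))
```

The detail worth noticing is the split between the bucket ARN (for the list and location actions) and the bucket ARN followed by `/*` (for the object actions). Mixing those two up is an easy way to end up with a policy that looks right but still denies access.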

Click Next: Tags, add tags if desired, then click Next: Review, name the policy, then click Create policy

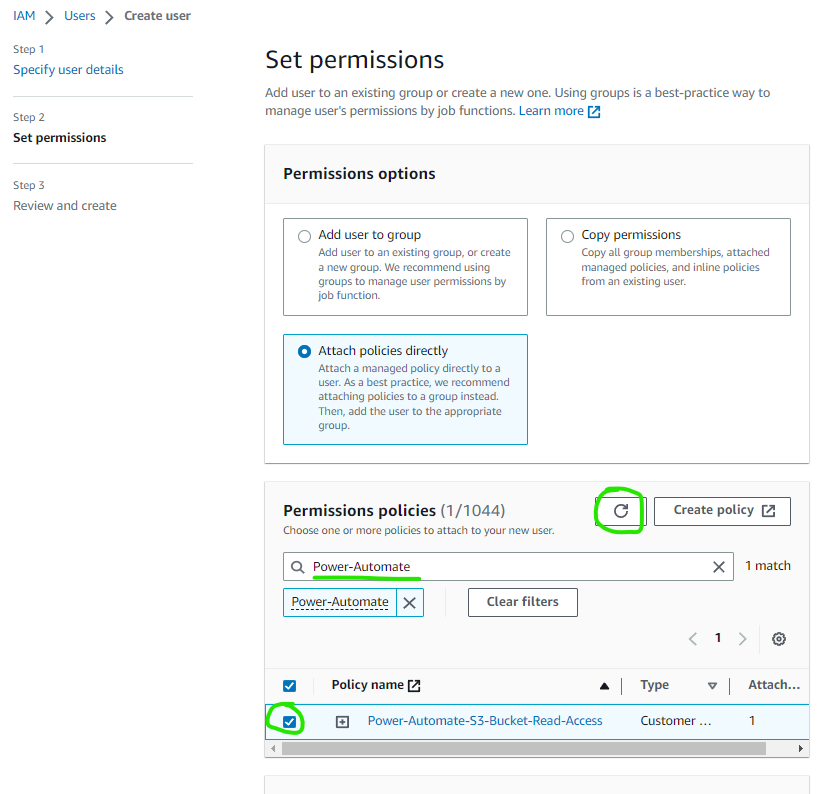

Once the policy is created, return to your original IAM window or tab, click the refresh button above the policy list, and use search to find the policy you just created - tick the box next to the policy and click Next

Add tags as appropriate, then click Create user

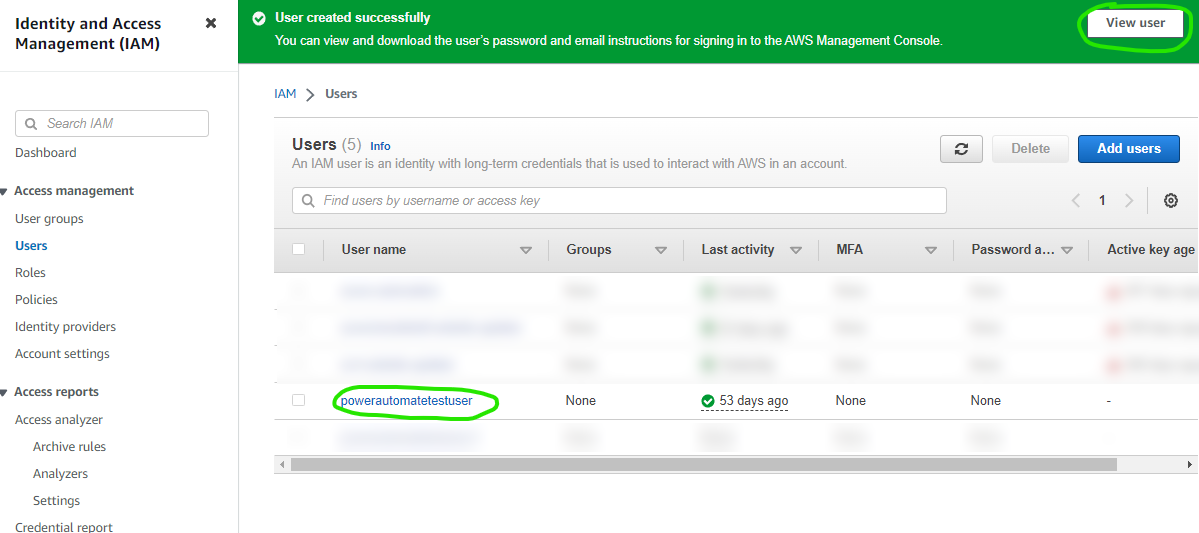

View the new user you created using the button near the top of the screen or by clicking the User name in the list

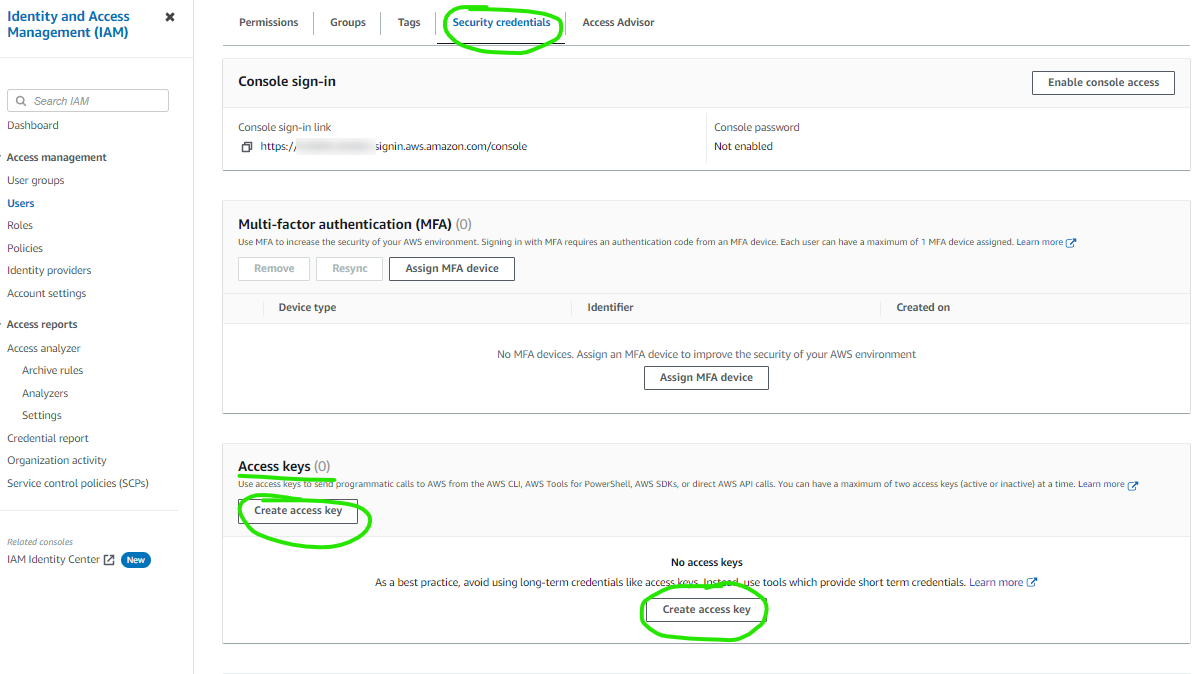

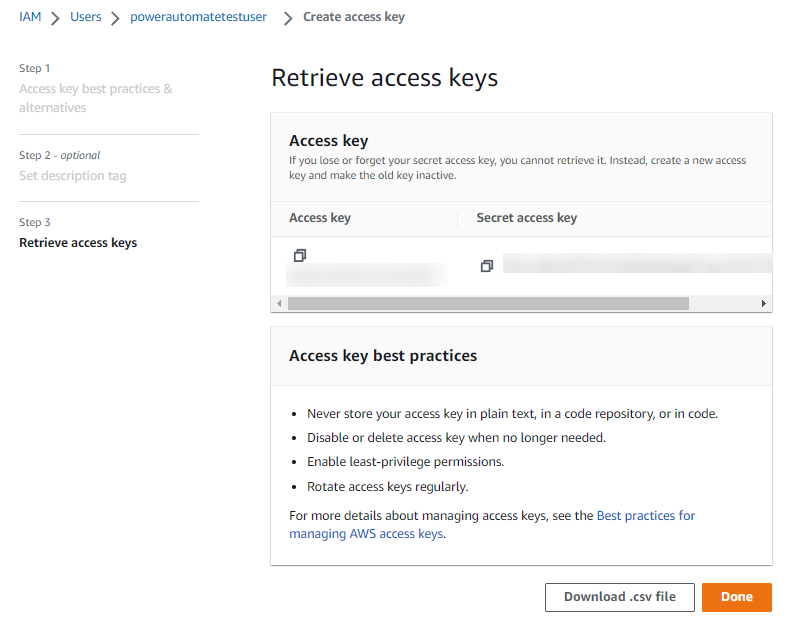

On the user screen, click the Security credentials tab, scroll down to the Access keys section, and click Create access key. Select the Application running outside AWS option, click Next, then click Create access key. Capture the Access key and Secret access key values - you will use these in the Power Automate setup later
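Before moving on to Power Automate, it can be worth confirming that the new key pair behaves the way the policy intends. The sketch below is one way to do that from Python, assuming the third-party `boto3` package is installed. The helper names `smoke_test` and `make_client` are hypothetical, and the calls simply mirror the read-only actions in the policy. I am not claiming these are the exact requests the Power Automate connector makes.

```python
def smoke_test(client, bucket: str, key: str) -> dict:
    """Try each read-only call the policy allows and report which succeed.

    `client` is expected to behave like a boto3 S3 client.
    """
    checks = {
        "list_buckets": lambda: client.list_buckets(),
        "get_bucket_location": lambda: client.get_bucket_location(Bucket=bucket),
        "list_objects_v2": lambda: client.list_objects_v2(Bucket=bucket, MaxKeys=1),
        "get_object": lambda: client.get_object(Bucket=bucket, Key=key),
    }
    results = {}
    for name, call in checks.items():
        try:
            call()
            results[name] = "ok"
        except Exception as exc:  # boto3 reports denials as botocore ClientError
            results[name] = f"failed: {exc}"
    return results


def make_client(access_key_id: str, secret_access_key: str):
    """Create a real S3 client from the IAM user's key pair (needs boto3)."""
    import boto3  # imported lazily so smoke_test has no hard dependency

    return boto3.client(
        "s3",
        aws_access_key_id=access_key_id,
        aws_secret_access_key=secret_access_key,
    )
```

Calling `smoke_test(make_client(key_id, secret), "neilsawesomepowerautomatebucket", "Testfile.txt")` with your own values should return "ok" for every entry if the user and policy are set up as described. Any "failed" entry points at the permission to revisit.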

Microsoft Power Automate (Flow) connector Setup

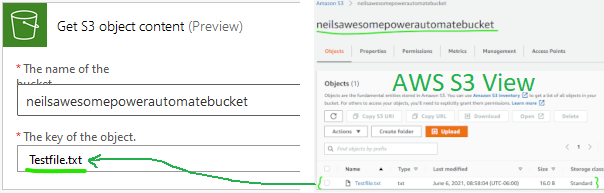

The Power Automate (Flow) setup is even easier than the AWS setup. We will configure Power Automate to connect to the S3 bucket (neilsawesomepowerautomatebucket) using the IAM user we created above and retrieve the contents of a text file.

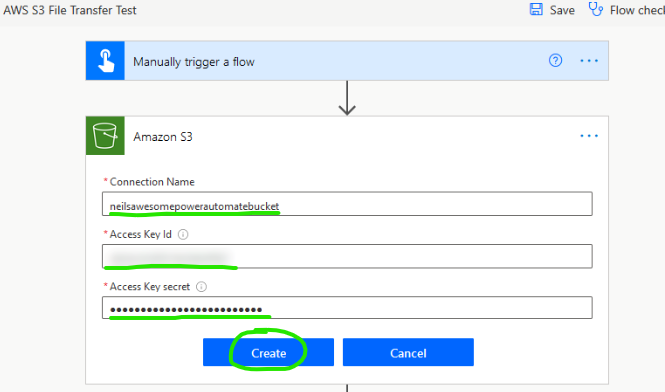

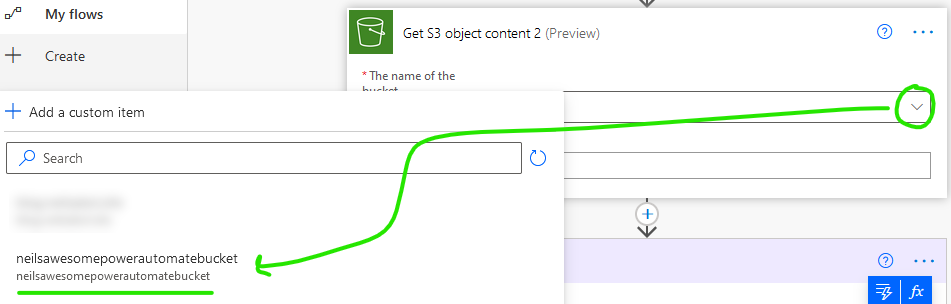

Add a Get S3 object content Action to your Flow (using the Power Automate Editor) and create a new Amazon S3 Connector

Configure the connector as follows (add a Connection Name of your choice and paste the Access Key Id and Access Key secret from AWS IAM)

If everything is configured properly, you should be able to select your bucket from the dropdown and specify the key (name) of a file in the bucket. I used Testfile.txt, which I pre-staged in S3.

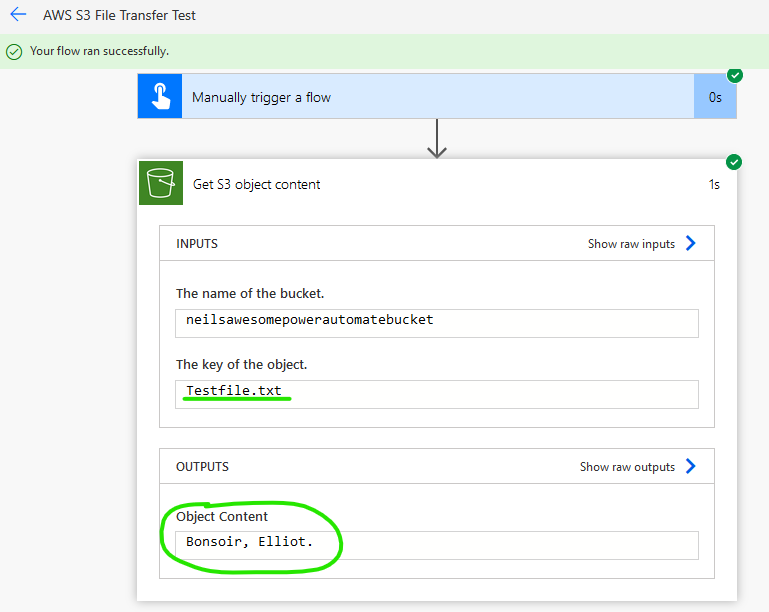

Run the Flow to test. This is a basic example. A real Flow would take the output of the Get S3 object content action and process it further

Error messages and meanings

The Amazon S3 connector is relatively simple, so not a lot can go wrong. In my experience, the most common issues are related to credentials and permissions (AWS policies).

Credential issues (Access Key Id and Access Key secret)

Symptoms of Power Automate being unable to access your S3 bucket(s) due to an incorrect Access Key or Secret can include the following error message:

Action 'Get_S3_object_content' failed: AWS denied access with the credentials provided in the connection...

If you encounter this message, ensure your Access Key Id and Access Key secret are correct. Note that this message can also indicate a permission issue (more below).

Permission issues (AWS IAM policy)

Symptoms of Power Automate being unable to access your S3 bucket(s) due to permission issues (as defined in your AWS IAM security policy) can include the following error messages:

There's a problem: The dynamic invocation request failed with error: { "status": 403, "message": "AWS denied access with the credentials provided in the connection...

This can mean that Power Automate is unable to enumerate the list of buckets in your AWS S3 account. If you encounter this message, ensure your AWS policy includes a properly defined s3:ListAllMyBuckets action.

Action 'Get_S3_object_content' failed: AWS denied access with the credentials provided in the connection...

This can mean that Power Automate does not have permission to read files from buckets in your AWS S3 account. If you encounter this message, ensure your AWS policy includes properly defined s3:ListBucket, s3:GetBucketLocation, s3:GetObject, and s3:GetObjectAcl actions.
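Since both permission errors look nearly identical, it can help to check the policy document itself rather than guess. The following is a rough Python sketch that reports which of the five read-only actions from this post a policy fails to grant. It is a deliberately simplified matcher of my own (hypothetical names `is_allowed` and `missing_permissions`) that ignores Deny statements, conditions, and NotAction/NotResource, so treat it as a quick sanity check and not a replacement for AWS's own policy evaluation.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """IAM allows a single string or a list for Action and Resource."""
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Return True if any Allow statement matches both action and resource."""
    for stmt in policy.get("Statement", []):
        if stmt.get("Effect") != "Allow":
            continue
        # Action names are compared case-insensitively; ARNs are not.
        actions = [a.lower() for a in _as_list(stmt.get("Action", []))]
        resources = _as_list(stmt.get("Resource", []))
        action_ok = any(fnmatchcase(action.lower(), a) for a in actions)
        resource_ok = any(fnmatchcase(resource, r) for r in resources)
        if action_ok and resource_ok:
            return True
    return False


def missing_permissions(policy: dict, bucket: str) -> list:
    """List the read-only actions from this post that the policy does not grant."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    required = [
        ("s3:ListAllMyBuckets", "arn:aws:s3:::*"),
        ("s3:ListBucket", bucket_arn),
        ("s3:GetBucketLocation", bucket_arn),
        ("s3:GetObject", f"{bucket_arn}/example-key"),
        ("s3:GetObjectAcl", f"{bucket_arn}/example-key"),
    ]
    return [action for action, arn in required if not is_allowed(policy, action, arn)]
```

Load your policy with `json.load` and pass it in along with the bucket name. An empty list means all five actions are covered. A policy that attaches s3:ListBucket to the `/*` resource instead of the bucket ARN will show s3:ListBucket as missing, which is a mistake that is hard to spot by eye.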

Content issues

Symptoms of Power Automate being unable to access specific files in S3 bucket(s) due to an incorrect “key” or file name can include the following error message:

Action 'Get_S3_object_content' failed: The object key was not found in the S3 bucket...

If you encounter this message, ensure you are pointed to the correct S3 bucket and that the file you are attempting to retrieve in your Flow exists in that bucket (with the name you specified).
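S3 object keys are case-sensitive, and characters such as a leading slash or trailing space count as part of the key, so a name that looks right can still miss. As a small illustration, here is a Python sketch that compares the key you typed against a list of keys you know exist (copied from the S3 console, for example). The function name `diagnose_key` is hypothetical, and the checks only cover the near-misses mentioned here.

```python
def diagnose_key(requested: str, existing_keys: list) -> str:
    """Explain why `requested` might not match any key in `existing_keys`."""
    if requested in existing_keys:
        return "exact match"
    # Keys are case-sensitive, so a case-only difference is still a miss.
    for key in existing_keys:
        if key.lower() == requested.lower():
            return f"case mismatch: did you mean {key!r}?"
    # Surrounding whitespace or a leading slash becomes part of the key.
    cleaned = requested.strip().lstrip("/")
    if cleaned != requested and cleaned in existing_keys:
        return f"extra characters: did you mean {cleaned!r}?"
    return "no similar key found"


if __name__ == "__main__":
    print(diagnose_key("testfile.txt", ["Testfile.txt"]))
```

For files stored under a "folder" in the bucket, remember that the key is the full path (for example `reports/Testfile.txt`), not just the file name.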

Power Automate limit issues

Symptoms of Power Automate hitting a limit when accessing specific (probably large) files in S3 bucket(s) can include the following error messages:

Flow run timed out. Please try again.

Action 'Get_S3_object_content' failed: BadGateway...

This stream does not support seek operations.

If you encounter any of these messages, ensure your AWS S3 connector usage is within the limits described in Microsoft's documentation, "Limits for automated, scheduled, and instant flows."

Closing thoughts

If I needed read access (to pull files/content from S3 bucket storage using Power Automate), I would opt for the native Amazon S3 connector every time (provided I already owned a Power Automate license). I speculate that write support is in the pipeline. Note that the Amazon S3 Flow Connector became a Premium connector once it was out of preview. That said, the overall cost of a Power Automate license for a single user is lower than the cost of Couchdrop (more below). Couchdrop’s standard license is cheaper at face value but requires a five-user commitment.

If I needed write access to Amazon S3 buckets today, I would still opt for Couchdrop, even though there is a nominal cost. More on that here.

I hope this helps, and please share your experiences and thoughts surrounding the Amazon S3 Connector for Flow in the comments.